Eighteen years ago, before “GPU acceleration” and “AI data center” became household terms, a small hi-tech company changed the rules of cryptography. In 2007, we unveiled a radical idea – using the untapped power of graphics processors to recover passwords, which coincided with the release of video cards capable of performing fixed-point calculations. What began as an experiment would soon redefine performance computing across nearly every field.

What Happened in 2007

In early 2007, we filed a U.S. patent for a technology that would forever change password recovery. At a time when GPUs were thought of purely as graphics engines, Elcomsoft engineers found a way to pair them with CPUs to dramatically accelerate cryptographic calculations. This discovery came just as NVIDIA officially released CUDA, the first toolkit that opened GPUs to general-purpose computing, and together these developments marked the start of a new era in computational performance.

Our in-house implementation of this concept led to Elcomsoft Distributed Password Recovery (EDPR) – the world’s first commercial product to harness GPU acceleration for cryptanalysis. What once required months of CPU-based brute-force computation could now be completed in days. Eighteen years later, GPU acceleration underpins everything from artificial intelligence to scientific modeling, but in 2007, it was Elcomsoft that first demonstrated its transformative potential in password recovery.

Beyond Gaming: The Rise of GPU Acceleration Everywhere

When we first demonstrated the potential of GPU acceleration in 2007, graphics cards were still designed almost exclusively for rendering dynamic 3D scenes in computer games. Over the following decade, however, GPUs evolved from specialized visual processors into fully programmable parallel computing engines. With thousands of lightweight cores capable of performing the same instruction on vast amounts of data, GPUs became ideal for workloads that rely on massive parallelism – transforming them into indispensable tools for modern computing far beyond their gaming roots.

What began as a niche technology has become the cornerstone of high-performance computing. The same parallel processing principles that speed up password recovery now fuel breakthroughs in science, finance, media, and AI. In less than two decades, GPUs have transitioned from the periphery of gaming rigs to the very heart of global innovation – redefining what’s possible in both everyday computing and the most advanced data centers.

Today, GPUs power some of the most demanding computational tasks across industries. In artificial intelligence and machine learning, they train neural networks that recognize speech, generate images, and power large language models. In scientific computing, GPUs accelerate molecular simulations, climate modeling, and astrophysics calculations once limited to supercomputers. Creative professionals rely on them for video rendering, encoding, and real-time effects, dramatically reducing production times. The world of cryptography and blockchain also owes much to GPU computation – from cryptocurrency mining to cutting-edge privacy technologies like zero-knowledge proofs. Even data analytics and big-data platforms now use GPU acceleration to process queries at interactive speeds, with toolkits such as NVIDIA RAPIDS and OmniSci making it accessible to mainstream users.

How GPU Acceleration Works

A typical desktop or workstation relies on two very different types of processors: the CPU (Central Processing Unit) and the GPU (Graphics Processing Unit) found in a video card. A CPU is designed for versatility; it contains a few powerful cores, each capable of handling a wide variety of instructions in sequence. This makes it ideal for general-purpose tasks such as running an operating system, executing application logic, or managing data flow. A GPU, on the other hand, contains tens of thousands of much smaller and simpler cores. Instead of running different operations on each core, a GPU executes the same instruction across many data points simultaneously, making it extremely efficient for highly parallel tasks such as image rendering, matrix multiplication, or password hash calculations.

This difference in design is often described as SIMD – Single Instruction, Multiple Data. In simple terms, the GPU repeats one operation (for example, adding two numbers) on large sets of data at once. A modern high-end graphics card like the NVIDIA RTX 4090 contains around 16,000 CUDA cores, while a high-end CPU such as the Intel Core Ultra 9 285K typically has only 24 threads – with only a handful executing on the powerful P-cores. Each GPU core is slower and less flexible than a CPU core, but when tens of thousands of them work together, they can outperform CPUs by orders of magnitude for the right type of workload.

However, this performance comes with limitations. GPUs are highly specialized and depend on regular, repetitive data patterns to achieve their speed. They cannot efficiently handle branching logic, complex decision trees, or irregular data structures – tasks that CPUs manage easily. Programming GPUs also requires specialized tools and languages such as CUDA (for NVIDIA cards) or OpenCL, which allow developers to adapt their algorithms to the GPU’s architecture. In practice, the best systems use both: CPUs coordinate the process and handle diverse logic (such as generating the flow of passwords to try based on complex masks and rules), while GPUs execute the heavy parallel computations (such as hashing the bunch of passwords fed by the CPU) that give modern computing its extraordinary acceleration.

Not as Simple as It Sounds

In practice, using GPUs efficiently is far more complex than simply offloading work from the CPU. Different hardware units perform differently depending on the hash algorithm, memory bandwidth, and system load; it is important to balance the different system components for reaching peak performance. For instance, EDPR recently introduced an Intelligent Load Balancing mechanism designed to handle this complexity. The system automatically analyzes each computing component – CPUs, dedicated GPUs, and even integrated graphics – to determine how tasks should be distributed for optimal throughput. Instead of assigning workloads based on static hardware specifications, the load balancer dynamically evaluates performance in real time, prioritizing powerful GPUs while utilizing CPU cores only when beneficial. This approach minimizes idle time, balances computation between heterogeneous devices, and ensures that every piece of hardware contributes effectively. The result demonstrates that true GPU acceleration requires more than raw processing power – it depends on intelligent coordination between all available resources.

Another practical challenge lies in handling fast algorithms where throughput reaches millions of password candidates per second. When password generation takes place on the host CPU, this step alone can become a serious bottleneck. Even a simple brute-force increment routine – the basic process of iterating through password combinations – must often be executed directly on the GPU to maintain efficiency. Implementing such logic in parallel, however, is not straightforward: synchronization, thread management, and data transfer overhead must all be carefully optimized to avoid losing the speed advantage that GPU computation provides. Dictionary-based attacks, password templates and script-based rules add further complexity, as managing large wordlists and transformations across thousands of threads requires meticulous planning and complex strategies.

Some password formats introduce additional complications. Algorithms used in WPA or Lotus Notes, for example, include some stages that can be run on a GPU, but other parts depend on CPU-bound processing. In these cases, the GPU cannot work independently – it must wait for the CPU to complete its portion of the calculation. If the CPU is underpowered or inefficiently utilized, the GPU’s potential is wasted, sitting idle while data is being prepared. Achieving balanced performance requires fine-tuned coordination between both processors and often benefits from a powerful host CPU to feed data fast enough.

NVIDIA CUDA: Higher Speeds, Greater Efficiency

Without a doubt, the development environment itself plays a significant role. On NVIDIA hardware, maximum performance is almost always achieved using CUDA, which allows low-level access to the GPU’s architecture and optimizations unavailable in generic frameworks. While this approach sacrifices cross-platform compatibility, this trade-off it provides greater computational efficiency. With CUDA, however, there is a trade-off: each new generation of NVIDIA hardware requires a new generation of CUDA, and CUDA itself can impose new limitations and system requirements. Here’s one example: the Blackwell generation of NVIDIA GPUs (the 5000 series boards such as the top consumer graphics card NVIDIA GeForce RTX 5090 or the powerful workstation board NVIDIA RTX PRO 6000 Blackwell) requires CUDA 12.8 or newer (currently, CUDA 12.9 and 13.0 are available). However, in these versions of CUDA, NVIDIA dropped 32-bit support, requiring 64-bit compile. This is the reason why Elcomsoft Distributed Password Recovery, a 32-bit tool, currently does not support the latest CUDA and, as a result, the latest generation of NVIDIA cards.

The 64-bit edition of Elcomsoft Distributed Password Recovery is currently in active development – a project that has been ongoing for more than three years. This update goes far beyond a simple user interface rewrite: it involves a complete modernization of the underlying architecture. The product relies on hundreds of plug-ins, each responsible for processing a specific data format, many of which were originally written for 32-bit systems with extensive low-level assembler optimizations. Porting and re-engineering these performance-critical components for modern 64-bit CPUs is a complex and time-consuming process, requiring careful adaptation to new instruction sets.

The Ugly Side of NVIDIA CUDA

But why CUDA is so much faster on NVIDIA than open-source APIs, and why those same APIs work much better on competing brands? Here comes the ugly side of CUDA: the closed source, lack of disclosure, and licensing restrictions.

NVIDIA’s approach to GPU computing has always favored a closed, proprietary ecosystem. While the company provides developers with CUDA, a truly exciting, mature and highly optimized programming framework, it does not publicly disclose the low-level GPU instruction set architecture (ISA) used by its hardware. Instead, CUDA code is compiled into an intermediate form called PTX (Parallel Thread Execution), which is then translated by NVIDIA’s closed-source drivers into actual machine instructions. This internal translation layer and the fact that the underlying ISA, scheduling behavior, and hardware microarchitecture remain undocumented, means that third-party frameworks and open APIs cannot access the same optimization paths available to CUDA. NVIDIA’s end-user license agreement explicitly prohibits reverse engineering, further preventing independent developers or open-source communities from building equally efficient toolchains targeting the same hardware.

As a result, open and cross-platform APIs such as Vulkan or OpenCL often operate at a disadvantage on NVIDIA GPUs. Without direct access to the proprietary ISA or compiler optimizations, these APIs must rely on higher-level abstractions, generic compiler heuristics, and in some cases partial reverse engineering to achieve functional compatibility. This leads to measurable performance gaps: identical compute kernels frequently run slower in Vulkan than in CUDA, especially in workloads that rely on fine-grained memory control, thread synchronization, or shared-memory optimizations. In practice, this design ensures that CUDA remains the only environment capable of fully exploiting NVIDIA hardware efficiency – a deliberate trade-off that secures ecosystem dominance but limits openness, portability, and competitive parity for alternative GPU computing frameworks.

Benchmarks

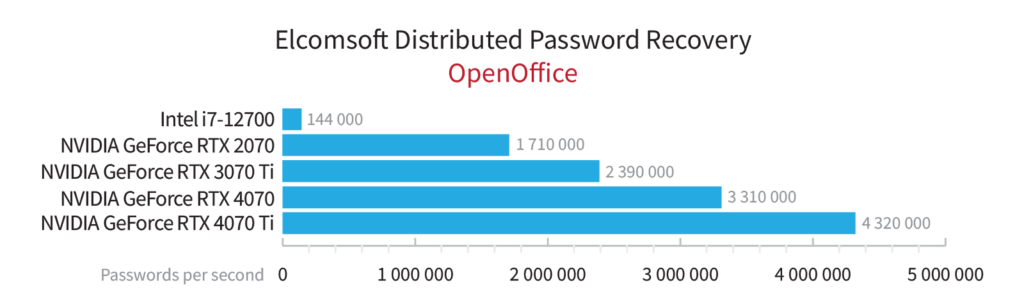

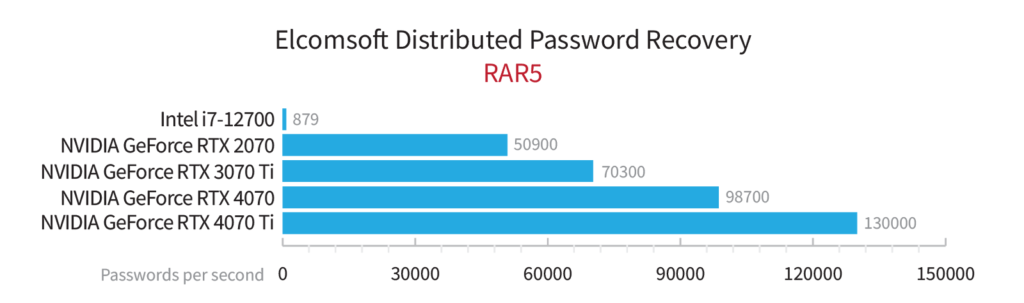

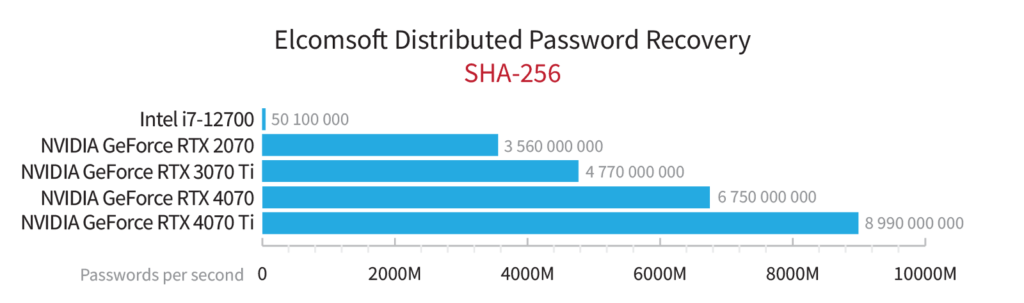

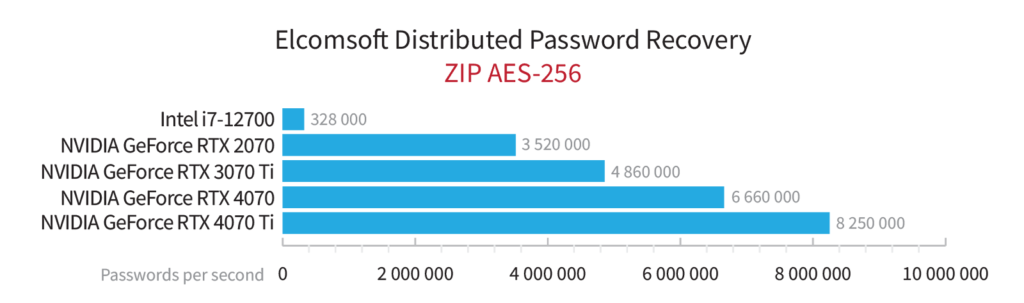

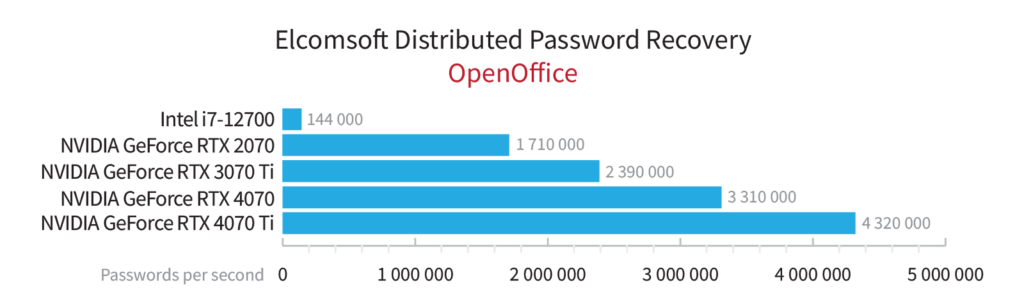

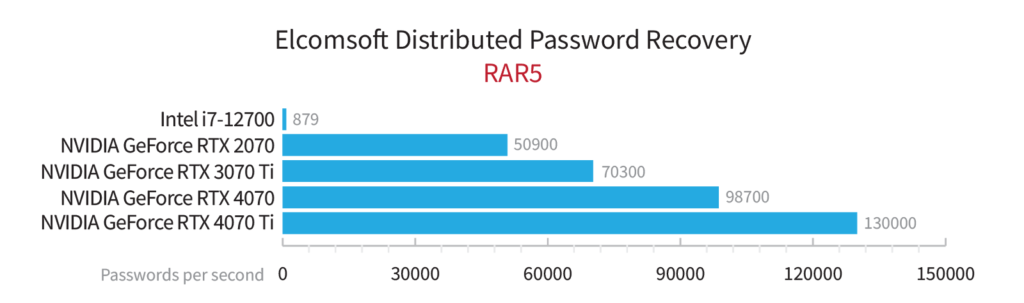

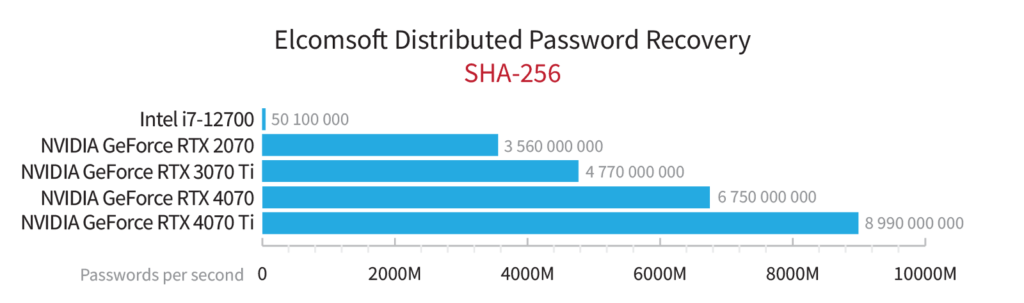

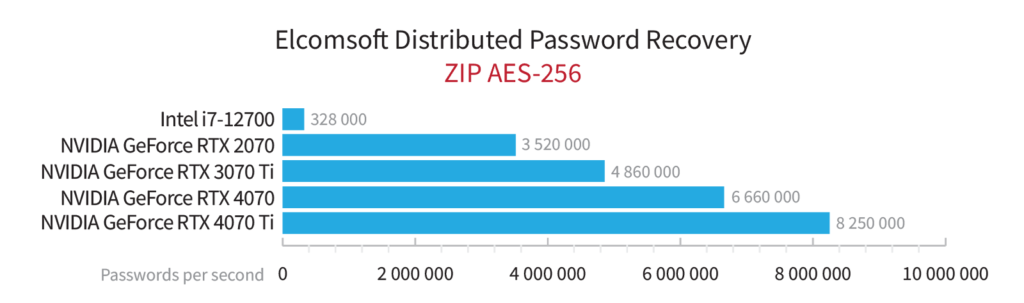

So how fast is “fast”? We’ve run a few benchmarks on the latest version of Distributed Password Recovery to demonstrate just how much this workload benefits from GPU acceleration.

Why Benchmarks Can Be Misleading

When applied to password recovery, GPU acceleration can deliver enormous performance gains – sometimes speeding up operations by a factor of 50, 100, or even more. However, such numbers can be misleading when taken at face value. While the GPU handles the most computationally intensive part of the workload, usually the repeated hashing steps, the CPU remains responsible for managing control flow, preparing input data, and processing results. Every password candidate must be generated, queued, and validated, and this coordination happens primarily on the CPU. As a result, the total speed of password recovery depends not only on the GPU’s raw power but also on how efficiently the slower CPU can keep the GPU supplied with data.

GPU acceleration is also highly dependent on the algorithm being attacked. Some hashing methods, such as MD5, SHA-1 or NTLM hashes (think Windows logon passwords), are well suited for parallel computation. They involve repetitive, predictable mathematical operations that map cleanly to GPU cores, resulting in substantial speedups – sometimes hundreds of times faster than CPU-only computation. Others, such as more complex key derivation functions or multi-stage encryption schemes, require frequent branching, conditional logic, or serial dependencies that do not parallelize effectively. In these cases, the GPU may provide only marginal benefits or none at all.

Certain modern algorithms are deliberately designed to resist GPU acceleration. AES-based encryption with frequent key changes or memory-hard algorithms such as scrypt and Argon2 demand large amounts of memory per thread, which GPUs typically lack. When each GPU core needs its own dedicated memory space, the hardware’s advantage in thread count disappears, leaving the CPU’s faster cores better suited for the task. For this reason, while GPU acceleration remains a vital tool in password recovery, its impact depends heavily on the underlying cryptographic design – and in some cases, hardware acceleration may deliver no practical advantage at all.

A major update is coming

We’re getting close: before the end of this year, we plan to roll out a major update to Distributed Password Recovery. The product has been completely rebuilt for a 64-bit architecture and now includes full support for the latest CUDA version, enabling seamless use of next-generation Blackwell-based accelerators. In real-world workloads, upgrading from an NVIDIA RTX 4090 to an RTX 5090 (Blackwell) is expected to deliver roughly a 1.5x boost in password recovery speed, depending on the format. Moving from an RTX 4080 to the same 5090 can provide up to a 2x performance increase.

Stay tuned. More details coming soon.

Eighteen years ago, Elcomsoft’s introduction of GPU acceleration for password recovery (and NVIDIA releasing CUDA) marked the beginning of a new era in applied cryptography and high-performance computing. Today, GPU acceleration drives progress in nearly every data-intensive field, from artificial intelligence and data analytics to video processing and scientific research. As GPU computing has advanced, so have the techniques designed to counter it. Memory-hungry algorithms such as scrypt and Argon2 deliberately limit parallel efficiency, ensuring that even the most powerful hardware cannot easily compromise modern encryption.

Eighteen years later, the balance between computational power and cryptographic resilience remains dynamic. GPU acceleration continues to evolve, becoming faster, more efficient, and more accessible than ever, while security researchers design algorithms that adapt to its strengths and limitations.